Day12

Definition: A pushdown automaton (PDA) is specified by a \(6\)-tuple \((Q, \Sigma, \Gamma, \delta, q_0, F)\) where \(Q\) is the finite set of states, \(\Sigma\) is the input alphabet, \(\Gamma\) is the stack alphabet, \[\delta: Q \times \Sigma_\varepsilon \times \Gamma_\varepsilon \to \mathcal{P}( Q \times \Gamma_\varepsilon)\] is the transition function, \(q_0 \in Q\) is the start state, and \(F \subseteq Q\) is the set of accept states.

For the PDA state diagrams below, \(\Sigma = \{0,1\}\).

| Mathematical description of language | State diagram of PDA recognizing language |
|---|---|
| | \(\Gamma = \{ \$, \#\}\) |
| | \(\Gamma = \{ \sun, 1\}\) |
| \(\{ 0^i 1^j 0^k \mid i,j,k \geq 0 \}\) | |

Note: an alternate notation replaces \(;\) with \(\to\) on arrow labels.

Corollary: For each language \(L\) over \(\Sigma\), if there is an NFA \(N\) with \(L(N)=L\), then there is a PDA \(M\) with \(L(M) = L\).

Proof idea: Declare the stack alphabet to be \(\Gamma = \Sigma\) and then don’t use the stack at all: every transition of the PDA neither pops nor pushes a symbol.
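The proof idea can be made concrete with a short Python sketch. The dictionary encoding is an assumption made for illustration. NFA transitions map `(state, symbol)` to a set of states. PDA transitions map `(state, symbol, pop)` to a set of `(state, push)` pairs. The empty string `""` stands in for \(\varepsilon\). The function name `nfa_to_pda` is hypothetical.

```python
EPS = ""  # the empty string stands in for epsilon


def nfa_to_pda(states, sigma, delta, start, accept):
    """Build a PDA 6-tuple that simulates the given NFA and ignores its stack.

    delta (NFA): dict mapping (q, a) -> set of states, with a in sigma or EPS.
    Returned PDA transitions: dict mapping (q, a, pop) -> set of (q', push).
    """
    gamma = set(sigma)  # stack alphabet Gamma = Sigma, as in the proof idea
    pda_delta = {}
    for (q, a), targets in delta.items():
        # read a (or nothing), pop nothing, push nothing
        pda_delta[(q, a, EPS)] = {(r, EPS) for r in targets}
    return set(states), set(sigma), gamma, pda_delta, start, set(accept)


# Hypothetical example NFA over {0,1} for strings that end in 1
nfa_delta = {("q0", "0"): {"q0"}, ("q0", "1"): {"q0", "q1"}}
Q, S, G, D, q_start, F = nfa_to_pda({"q0", "q1"}, {"0", "1"}, nfa_delta, "q0", {"q1"})
```

The states, start state, and accept states carry over unchanged. Only the arrow labels gain the two stack components, both \(\varepsilon\).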

Big picture: PDAs are motivated by wanting to add some memory of unbounded size to NFA. How do we accomplish a similar enhancement of regular expressions to get a syntactic model that is more expressive?

DFA, NFA, PDA: Machines process one input string at a time; the computation of a machine on its input string reads the input from left to right.

Regular expressions: Syntactic descriptions of all strings that match a particular pattern; the language described by a regular expression is built up recursively according to the expression’s syntax.

Context-free grammars: Rules to produce one string at a time, adding characters from the middle, beginning, or end of the final string as the derivation proceeds.

Day14

Theorem 2.20: A language is generated by some context-free grammar if and only if it is recognized by some pushdown automaton.

Definition: A language is called context-free if it is the language generated by some context-free grammar. The class of all context-free languages over a given alphabet \(\Sigma\) is called CFL.

Consequences:

Quick proof that every regular language is context-free.

To prove closure of the class of context-free languages under a given operation, we can choose either of two modes of proof (via CFGs or PDAs) depending on which is easier.

To fully specify a PDA, we could give its \(6\)-tuple formal definition, or we could give its input alphabet, stack alphabet, and state diagram. An informal description of a PDA is a step-by-step description of how its computations would process input strings; the reader should be able to reconstruct the state diagram or formal definition precisely from such a description. The informal description of a PDA can refer to some common modules or subroutines that are computable by PDAs:

PDAs can “test for emptiness of stack” without providing details. How? We can always push a special end-of-stack symbol, \(\$\), at the start, before processing any input, and then use this symbol as a flag.

PDAs can “test for end of input” without providing details. How? We can transform a PDA to one where accepting states are only those reachable when there are no more input symbols.

Suppose \(L_1\) and \(L_2\) are context-free languages over \(\Sigma\). Goal: \(L_1 \cup L_2\) is also context-free.

Approach 1: with PDAs

Let \(M_1 = ( Q_1, \Sigma, \Gamma_1, \delta_1, q_1, F_1)\) and \(M_2 = ( Q_2, \Sigma, \Gamma_2, \delta_2, q_2, F_2)\) be PDAs with \(L(M_1) = L_1\) and \(L(M_2) = L_2\).

Define \(M =\)

Approach 2: with CFGs

Let \(G_1 = (V_1, \Sigma, R_1, S_1)\) and \(G_2 = (V_2, \Sigma, R_2, S_2)\) be CFGs with \(L(G_1) = L_1\) and \(L(G_2) = L_2\).

Define \(G =\)

Suppose \(L_1\) and \(L_2\) are context-free languages over \(\Sigma\). Goal: \(L_1 \circ L_2\) is also context-free.

Approach 1: with PDAs

Let \(M_1 = ( Q_1, \Sigma, \Gamma_1, \delta_1, q_1, F_1)\) and \(M_2 = ( Q_2, \Sigma, \Gamma_2, \delta_2, q_2, F_2)\) be PDAs with \(L(M_1) = L_1\) and \(L(M_2) = L_2\).

Define \(M =\)

Approach 2: with CFGs

Let \(G_1 = (V_1, \Sigma, R_1, S_1)\) and \(G_2 = (V_2, \Sigma, R_2, S_2)\) be CFGs with \(L(G_1) = L_1\) and \(L(G_2) = L_2\).

Define \(G =\)
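One standard way to fill in the grammar-based approaches can be sketched in Python. It follows the usual textbook constructions. The tuple encoding and the names `union_grammar`, `concat_grammar`, `generate`, and the fresh start variable `S0` are hypothetical. Assuming \(V_1 \cap V_2 = \emptyset\), add a fresh start variable \(S_0\). For union, its rules are \(S_0 \to S_1 \mid S_2\); for concatenation, the rule is \(S_0 \to S_1 S_2\). Rules are encoded as a dictionary from each variable to a list of right-hand sides (tuples of symbols, with `()` for \(\varepsilon\)). The helper `generate` is only a testing aid. It prunes sentential forms by their number of terminals, so it is only assumed to terminate for small grammars like the examples, where every rule cycle adds terminals.

```python
def _check_fresh(v1, v2, new_start):
    # The constructions assume disjoint variable sets and a brand-new start variable.
    assert not (v1 & v2), "rename variables so that V1 and V2 are disjoint"
    assert new_start not in v1 | v2, "the new start variable must be fresh"


def union_grammar(g1, g2, new_start="S0"):
    """G with rules R1, R2 plus S0 -> S1 | S2."""
    v1, sigma, r1, s1 = g1
    v2, _, r2, s2 = g2
    _check_fresh(v1, v2, new_start)
    rules = {**r1, **r2, new_start: [(s1,), (s2,)]}
    return v1 | v2 | {new_start}, sigma, rules, new_start


def concat_grammar(g1, g2, new_start="S0"):
    """G with rules R1, R2 plus S0 -> S1 S2."""
    v1, sigma, r1, s1 = g1
    v2, _, r2, s2 = g2
    _check_fresh(v1, v2, new_start)
    rules = {**r1, **r2, new_start: [(s1, s2)]}
    return v1 | v2 | {new_start}, sigma, rules, new_start


def generate(g, max_len):
    """Strings of length <= max_len derivable in g, found via leftmost derivations."""
    variables, _, rules, start = g
    seen, frontier, words = set(), [(start,)], set()
    while frontier:
        form = frontier.pop()
        if form in seen:
            continue
        seen.add(form)
        if sum(sym not in variables for sym in form) > max_len:
            continue  # terminals never disappear, so this form is too long already
        idx = next((i for i, sym in enumerate(form) if sym in variables), None)
        if idx is None:  # no variables left: a derived string
            words.add("".join(form))
            continue
        for rhs in rules[form[idx]]:  # expand the leftmost variable
            frontier.append(form[:idx] + tuple(rhs) + form[idx + 1:])
    return words


# Hypothetical example grammars: A -> 0A1 | epsilon   and   B -> 1B | 1
G1 = ({"A"}, {"0", "1"}, {"A": [("0", "A", "1"), ()]}, "A")
G2 = ({"B"}, {"0", "1"}, {"B": [("1", "B"), ("1",)]}, "B")
```

For example, `generate(union_grammar(G1, G2), 3)` collects the short strings in \(\{0^n1^n\} \cup \{1\}^+\).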

Summary

Over a fixed alphabet \(\Sigma\), a language \(L\) is regular iff it is described by some regular expression, iff it is recognized by some DFA, iff it is recognized by some NFA.

Over a fixed alphabet \(\Sigma\), a language \(L\) is context-free iff it is generated by some CFG, iff it is recognized by some PDA.

Fact: Every regular language is a context-free language.

Fact: There are context-free languages that are nonregular.

Fact: There are countably many regular languages.

Fact: There are countably infinitely many context-free languages.

Consequence: Since there are uncountably many languages over any nonempty alphabet \(\Sigma\), most languages are not context-free!

Examples of non-context-free languages:

\[\begin{align*}
&\{ a^n b^n c^n \mid 0 \leq n , n \in \mathbb{Z}\}\\
&\{ a^i b^j c^k \mid 0 \leq i \leq j \leq k , i \in \mathbb{Z}, j \in \mathbb{Z}, k \in \mathbb{Z}\}\\
&\{ ww \mid w \in \{0,1\}^* \}
\end{align*}\] (Sipser Examples 2.36, 2.37, and 2.38)

There is a Pumping Lemma for CFLs that can be used to prove that a specific language is not context-free. If \(A\) is a context-free language, then there is a number \(p\) (the pumping length) with the following property. If \(s\) is any string in \(A\) of length at least \(p\), then \(s\) may be divided into five pieces \(s = uvxyz\) satisfying (1) for each \(i \geq 0\), \(uv^ixy^iz \in A\), (2) \(|vy|>0\), and (3) \(|vxy| \leq p\). We will not go into the details of the proof or application of the Pumping Lemma for CFLs this quarter.

Recall: A set \(X\) is said to be closed under an operation \(OP\) if, for any elements in \(X\), applying \(OP\) to them gives an element in \(X\).

| True/False | Closure claim |
|---|---|
| True | The set of integers is closed under multiplication. |
| | \(\forall x \forall y \left( ~(x \in \mathbb{Z} \wedge y \in \mathbb{Z})\to xy \in \mathbb{Z}~\right)\) |
| True | For each set \(A\), the power set of \(A\) is closed under intersection. |
| | \(\forall A_1 \forall A_2 \left( ~(A_1 \in \mathcal{P}(A) \wedge A_2 \in \mathcal{P}(A)) \to A_1 \cap A_2 \in \mathcal{P}(A)~\right)\) |
| | The class of regular languages over \(\Sigma\) is closed under complementation. |
| | The class of regular languages over \(\Sigma\) is closed under union. |
| | The class of regular languages over \(\Sigma\) is closed under intersection. |
| | The class of regular languages over \(\Sigma\) is closed under concatenation. |
| | The class of regular languages over \(\Sigma\) is closed under Kleene star. |
| | The class of context-free languages over \(\Sigma\) is closed under complementation. |
| | The class of context-free languages over \(\Sigma\) is closed under union. |
| | The class of context-free languages over \(\Sigma\) is closed under intersection. |
| | The class of context-free languages over \(\Sigma\) is closed under concatenation. |
| | The class of context-free languages over \(\Sigma\) is closed under Kleene star. |

Day11

Regular sets are not the end of the story.

Many nice / simple / important sets are not regular.

Limitation of the finite-state automaton model: it can’t “count”, can only remember finitely far into the past, can’t backtrack, and must make decisions in “real-time”.

We know actual computers are more powerful than this model...

The next model of computation. Idea: allow some memory of unbounded size. How?

To generalize regular expressions: context-free grammars.

To generalize NFA: pushdown automata, which are like NFA with access to a stack. The number of states is fixed; the number of entries in the stack is unbounded. At each step, (1) transition to a new state based on the current state, the letter read, and the top letter of the stack, then (2) (possibly) push or pop a letter to (or from) the top of the stack. Accept a string iff there is some sequence of states and some sequence of stack contents that lets the PDA process the entire input string and end in an accepting state.

Is there a PDA that recognizes the nonregular language \(\{0^n1^n \mid n \geq 0 \}\)?

The PDA with state diagram above can be informally described as:

Read symbols from the input. As each 0 is read, push it onto the stack. As soon as 1s are seen, pop a 0 off the stack for each 1 read. If the stack becomes empty and we are at the end of the input string, accept the input. If the stack becomes empty and there are 1s left to read, or if 1s are finished while the stack still contains 0s, or if any 0s appear in the string following 1s, reject the input.
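For experimenting with traces like the ones below, here is a minimal Python sketch of a nondeterministic PDA simulator. It explores configurations (state, input position, stack) breadth-first. A string is accepted when some configuration has read the whole input and sits in an accept state. The transition table `DELTA_0N1N` is an assumption modeled on Sipser's Example 2.14 PDA for \(\{0^n1^n \mid n \geq 0\}\) (start state `q1`, accept states `q1` and `q4`), and it may not match the class state diagram exactly. The stack-height cap `max_stack` is also our assumption, added so the search always terminates.

```python
from collections import deque

EPS = ""  # the empty string stands in for epsilon

# Assumed transitions, modeled on Sipser Example 2.14: (state, read, pop) -> {(state, push)}
DELTA_0N1N = {
    ("q1", EPS, EPS): {("q2", "$")},  # push the end-of-stack marker
    ("q2", "0", EPS): {("q2", "0")},  # push each 0
    ("q2", "1", "0"): {("q3", EPS)},  # first 1: pop a 0
    ("q3", "1", "0"): {("q3", EPS)},  # each later 1: pop a 0
    ("q3", EPS, "$"): {("q4", EPS)},  # marker on top means the stack is "empty"
}


def pda_accepts(delta, start, accept, w, max_stack=None):
    """Breadth-first search over configurations (state, position in w, stack)."""
    if max_stack is None:
        max_stack = len(w) + 2  # assumed cap so epsilon-pushes cannot loop forever
    first = (start, 0, ())
    seen, queue = {first}, deque([first])
    while queue:
        q, i, stack = queue.popleft()
        if i == len(w) and q in accept:
            return True  # whole input read and an accept state reached
        reads = [EPS] + ([w[i]] if i < len(w) else [])
        pops = [EPS] + ([stack[-1]] if stack else [])
        for a in reads:
            for b in pops:
                for r, c in delta.get((q, a, b), set()):
                    rest = stack[:-1] if b != EPS else stack
                    new_stack = rest + ((c,) if c != EPS else ())
                    if len(new_stack) > max_stack:
                        continue
                    cfg = (r, i + (1 if a != EPS else 0), new_stack)
                    if cfg not in seen:
                        seen.add(cfg)
                        queue.append(cfg)
    return False
```

With this table, the computation on \(01\) pushes \(\$\), pushes \(0\), pops that \(0\) when reading \(1\), and then pops \(\$\) to reach `q4` with the whole input read, so it accepts. On \(011\), the last \(1\) finds \(\$\) on top of the stack, no transition can read it, and the string is rejected.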

Trace a computation of this PDA on the input string \(01\).

Extra practice: Trace the computations of this PDA on the input string \(011\).

A PDA recognizing the set \(\{ \hspace{1.5in} \}\) can be informally described as:

Read symbols from the input. As each 0 is read, push it onto the stack. As soon as 1s are seen, pop a 0 off the stack for each 1 read. If the stack becomes empty and there is exactly one 1 left to read, read that 1 and accept the input. If the stack becomes empty and there are either zero or more than one 1s left to read, or if the 1s are finished while the stack still contains 0s, or if any 0s appear in the input following 1s, reject the input.

Modify the state diagram below to get a PDA that implements this description:

Day15

We are ready to introduce a formal model that will capture a notion of general purpose computation.

Similar to DFA, NFA, PDA: input will be an arbitrary string over a fixed alphabet.

Different from NFA, PDA: machine is deterministic.

Different from DFA, NFA, PDA: read-write head can move both to the left and to the right, and can extend to the right past the original input.

Similar to DFA, NFA, PDA: transition function drives computation one step at a time by moving within a finite set of states, always starting at designated start state.

Different from DFA, NFA, PDA: the special states for rejecting and accepting take effect immediately.

(See more details: Sipser p. 166)

Formally: a Turing machine is \(M= (Q, \Sigma, \Gamma, \delta, q_0, q_{accept}, q_{reject})\) where \(\delta\) is the transition function \[\delta: Q\times \Gamma \to Q \times \Gamma \times \{L, R\}\] The computation of \(M\) on a string \(w\) over \(\Sigma\) is:

Read/write head starts at leftmost position on tape.

Input string is written on the \(|w|\)-many leftmost cells of the tape; the rest of the tape cells have the blank symbol. The tape alphabet is \(\Gamma\) with \(\textvisiblespace\in \Gamma\) and \(\Sigma \subseteq \Gamma\). The blank symbol \(\textvisiblespace \notin \Sigma\).

Given the current state of the machine and the current symbol being read at the tape head, the machine transitions to the next state, writes a symbol to the current position of the tape head (overwriting the existing symbol), and moves the tape head L or R (if possible).

Computation ends if and when the machine enters either the accept or the reject state. This is called halting. Note: \(q_{accept} \neq q_{reject}\).

The language recognized by the Turing machine \(M\) is \(L(M) = \{ w \in \Sigma^* \mid w \textrm{ is accepted by } M\}\), which is defined as \[\{ w \in \Sigma^* \mid \textrm{computation of $M$ on $w$ halts after entering the accept state}\}\]
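A minimal Python sketch of this computation. It assumes the transition function is a dictionary from `(state, symbol)` to `(new_state, written_symbol, move)`, with `"_"` as a stand-in for the blank symbol \(\textvisiblespace\). A computation may never halt, so the simulator stops after a hypothetical step budget `max_steps` and reports `None`. Missing transitions are assumed to go to \(q_{reject}\), following Sipser's state-diagram convention. The example machine `SCAN` is hypothetical.

```python
BLANK = "_"  # stands in for the blank symbol (visible space)


def run_tm(delta, start, q_accept, q_reject, w, max_steps=10_000):
    """Simulate a single-tape TM on input w.

    delta: dict mapping (state, symbol) -> (new_state, written_symbol, "L" or "R").
    Returns True if M halts in q_accept, False if it halts in q_reject,
    and None if it has not halted after max_steps steps (it may loop forever).
    Assumption: a missing (state, symbol) entry goes to q_reject, writing a
    blank and moving right.
    """
    tape = dict(enumerate(w))  # input on the leftmost |w| cells; all other cells blank
    state, head, steps = start, 0, 0
    while state not in (q_accept, q_reject):
        if steps == max_steps:
            return None
        symbol = tape.get(head, BLANK)
        state, written, move = delta.get((state, symbol), (q_reject, BLANK, "R"))
        tape[head] = written  # overwrite the current cell
        # a left move at the leftmost cell leaves the head where it is
        head = head + 1 if move == "R" else max(head - 1, 0)
        steps += 1
    return state == q_accept


# Hypothetical machine: scan right past 0s, accept at the first 1, and keep
# moving right forever on blanks (so it never rejects; it may loop).
SCAN = {
    ("q0", "0"): ("q0", "0", "R"),
    ("q0", "1"): ("qacc", "1", "R"),
    ("q0", BLANK): ("q0", BLANK, "R"),
}
```

`SCAN` accepts `"001"` but runs forever on `"000"` (moving right over blanks), so `run_tm` returns `None` there. A step budget can show that a computation has not halted yet, but never that it will not halt.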


Formal definition:

Sample computation:

| \(q0\downarrow\) | | | | | | |
|---|---|---|---|---|---|---|
| \(0\) | \(0\) | \(0\) | \(\textvisiblespace\) | \(\textvisiblespace\) | \(\textvisiblespace\) | \(\textvisiblespace\) |

The language recognized by this machine is …

Describing Turing machines (Sipser p. 185): To define a Turing machine, we could give a

Formal definition: the \(7\)-tuple of parameters including set of states, input alphabet, tape alphabet, transition function, start state, accept state, and reject state; or,

Implementation-level definition: English prose that describes the Turing machine head movements relative to contents of tape, and conditions for accepting / rejecting based on those contents; or,

High-level description: description of algorithm (precise sequence of instructions), without implementation details of machine. As part of this description, can “call” and run another TM as a subroutine.

Fix \(\Sigma = \{0,1\}\), \(\Gamma = \{ 0, 1, \textvisiblespace\}\) for the Turing machines with the following state diagrams:

Example of string accepted:

Example of string rejected:

Implementation-level description

High-level description

Example of string accepted:

Example of string rejected:

Implementation-level description

High-level description

Example of string accepted:

Example of string rejected:

Implementation-level description

High-level description

Example of string accepted:

Example of string rejected:

Implementation-level description

High-level description

Day16

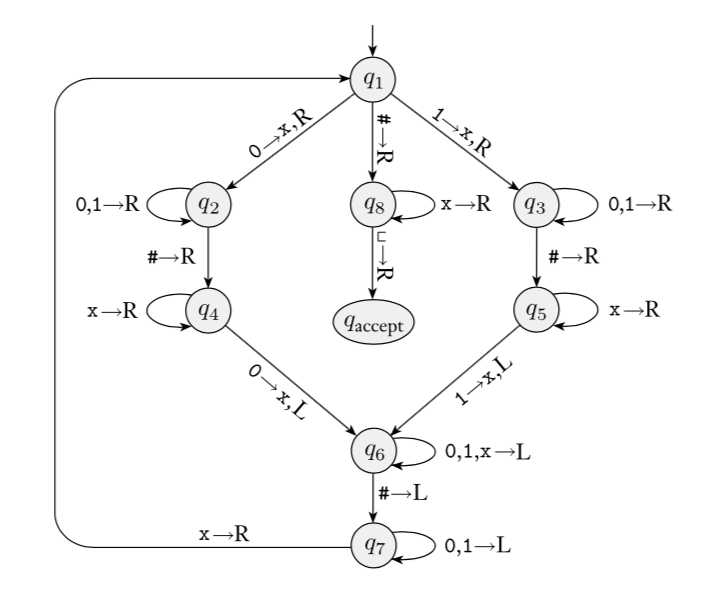

Sipser Figure 3.10

Conventions in state diagram of TM: a \(b \to R\) label means \(b \to b, R\), and all arrows missing from the diagram represent transitions with output \((q_{reject}, \textvisiblespace , R)\).


Implementation level description of this machine:

Zig-zag across tape to corresponding positions on either side of \(\#\) to check whether the characters in these positions agree. If they do not, or if there is no \(\#\), reject. If they do, cross them off.

Once all symbols to the left of the \(\#\) are crossed off, check for any un-crossed-off symbols to the right of \(\#\); if there are any, reject; if there aren’t, accept.

The language recognized by this machine is \[\{ w \# w \mid w \in \{0,1\}^* \}\]
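The implementation-level description can be mirrored in Python by treating the tape as a list and overwriting crossed-off cells with `"x"`. This sketches the algorithm's logic under our own encoding (`"_"` for the blank; `zigzag_accepts` is a hypothetical name). It is not a transcription of the states in Sipser Figure 3.10. In particular, the machine's leftward zag back to the next uncrossed symbol is replaced by a list search.

```python
BLANK = "_"  # stands in for the blank symbol


def zigzag_accepts(w):
    """Follow the implementation-level description on a list 'tape'.

    Crossed-off cells are overwritten with "x". Returns True to accept.
    """
    tape = list(w) + [BLANK]
    if "#" not in tape:
        return False  # no separator: reject
    sep = tape.index("#")
    while True:
        # leftmost symbol to the left of # that is not yet crossed off
        i = next((k for k in range(sep) if tape[k] != "x"), None)
        if i is None:
            break  # everything left of # is crossed off
        c, tape[i] = tape[i], "x"
        # zig to the right: first un-crossed-off cell after #
        j = sep + 1
        while tape[j] == "x":
            j += 1
        if tape[j] != c:
            return False  # corresponding positions disagree
        tape[j] = "x"
    # check for un-crossed-off symbols to the right of #
    j = sep + 1
    while tape[j] == "x":
        j += 1
    return tape[j] == BLANK
```

For instance, `zigzag_accepts("01#01")` crosses off both pairs and accepts, while `zigzag_accepts("01#1")` rejects on the first comparison (\(0\) versus \(1\)).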

Computation on input string \(01\#01\)

| \(q_1 \downarrow\) | | | | | | |
|---|---|---|---|---|---|---|
| \(0\) | \(1\) | \(\#\) | \(0\) | \(1\) | \(\textvisiblespace\) | \(\textvisiblespace\) |

High-level description of this machine is

Recall: High-level descriptions of Turing machine algorithms are written as indented text within quotation marks. Stages of the algorithm are typically numbered consecutively. The first line specifies the input to the machine, which must be a string.

Extra practice

Computation on input string \(01\#1\)

| \(q_1\downarrow\) | | | | | | |
|---|---|---|---|---|---|---|
| \(0\) | \(1\) | \(\#\) | \(1\) | \(\textvisiblespace\) | \(\textvisiblespace\) | \(\textvisiblespace\) |

A language \(L\) is recognized by a Turing machine \(M\) means

A Turing machine \(M\) recognizes a language \(L\) means

A Turing machine \(M\) is a decider means

A language \(L\) is decided by a Turing machine \(M\) means

A Turing machine \(M\) decides a language \(L\) means

Fix \(\Sigma = \{0,1\}\), \(\Gamma = \{ 0, 1, \textvisiblespace\}\) for the Turing machines with the following state diagrams:

| | |
|---|---|
| Decider? Yes / No | Decider? Yes / No |
| Decider? Yes / No | Decider? Yes / No |

Day17

A Turing-recognizable language is a set of strings that is the language recognized by some Turing machine. We also say that such languages are recognizable.

A Turing-decidable language is a set of strings that is the language recognized by some decider. We also say that such languages are decidable.

An unrecognizable language is a language that is not Turing-recognizable.

An undecidable language is a language that is not Turing-decidable.

True or False: Any decidable language is also recognizable.

True or False: Any recognizable language is also decidable.

True or False: Any undecidable language is also unrecognizable.

True or False: Any unrecognizable language is also undecidable.

True or False: The class of Turing-decidable languages is closed under complementation.

Using formal definition:

Using high-level description:

Church-Turing Thesis (Sipser p. 183): The informal notion of algorithm is formalized completely and correctly by the formal definition of a Turing machine. In other words: all reasonably expressive models of computation are exactly as expressive as the standard Turing machine.

Day18

Definition: A language \(L\) over an alphabet \(\Sigma\) is called co-recognizable if its complement, defined as \(\Sigma^* \setminus L = \{ x \in \Sigma^* \mid x \notin L \}\), is Turing-recognizable.

Notation: The complement of a set \(X\) is denoted with a superscript \(c\), \(X^c\), or an overline, \(\overline{X}\).

Theorem (Sipser Theorem 4.22): A language is Turing-decidable if and only if both it and its complement are Turing-recognizable.

Proof, first direction: Suppose language \(L\) is Turing-decidable. WTS that both it and its complement are Turing-recognizable.

Proof, second direction: Suppose language \(L\) is Turing-recognizable, and so is its complement. WTS that \(L\) is Turing-decidable.

Dovetailing: interleaving progress on multiple computations by limiting the number of steps each computation makes in each round.
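A small Python sketch of dovetailing, under a modeling assumption that is ours. Each computation is a generator, and one call to `next` is one step. Returning `True` or `False` means halting in accept or reject; never returning means looping. `first_to_accept` runs one step of each computation per round, so a computation that loops cannot block the others. The recognizers `recognize_even` and `recognize_odd` are hypothetical stand-ins for Turing machines recognizing a language and its complement. That is the situation in the second direction of Theorem 4.22.

```python
from typing import Generator, Optional

# Modeling assumption: each next() is one step; returning True means accept,
# returning False means reject, and never returning means looping.
Computation = Generator[None, None, bool]


def first_to_accept(*computations: Computation) -> Optional[int]:
    """Dovetail: one step of each still-running computation per round.

    Returns the index of the first computation to accept, or None if all of
    them halt and reject. Loops forever if none accepts and some never halts.
    """
    running = list(enumerate(computations))
    while running:
        still_running = []
        for index, comp in running:
            try:
                next(comp)  # advance this computation by one step
            except StopIteration as halt:
                if halt.value:
                    return index  # halted in accept
                continue  # halted in reject: drop it
            still_running.append((index, comp))
        running = still_running
    return None


def recognize_even(w: str) -> Computation:
    """Hypothetical recognizer for even-length strings: loops on odd length."""
    if len(w) % 2 == 0:
        for _ in w:
            yield  # pretend each symbol costs one step
        return True
    while True:
        yield


def recognize_odd(w: str) -> Computation:
    """Hypothetical recognizer for the complement: loops on even length."""
    if len(w) % 2 == 1:
        for _ in w:
            yield
        return True
    while True:
        yield


def decide_even(w: str) -> bool:
    # Exactly one of the two recognizers accepts, so this always halts.
    return first_to_accept(recognize_even(w), recognize_odd(w)) == 0
```

The same helper also gives a recognizer for the union of two recognizable languages. Accept when `first_to_accept(run_M1(w), run_M2(w))` is not `None`, where `run_M1` and `run_M2` are hypothetical. If neither machine accepts, this may loop forever, which a recognizer is allowed to do.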

Claim: If two languages (over a fixed alphabet \(\Sigma\)) are Turing-decidable, then their union is as well.

Proof:

Claim: If two languages (over a fixed alphabet \(\Sigma\)) are Turing-recognizable, then their union is as well.

Proof:

Day7

Review: The language recognized by the NFA over \(\{a,b\}\) with state diagram

is:

So far, we know:

The collection of languages that are each recognizable by a DFA is closed under complementation.

Could we do the same construction with NFA?

The collection of languages that are each recognizable by an NFA is closed under union.

Could we do the same construction with DFA?

Happily, though, an analogous claim is true!

Suppose \(A_1, A_2\) are languages over an alphabet \(\Sigma\).

Claim: if there is a DFA \(M_1\) such that \(L(M_1) = A_1\) and a DFA \(M_2\) such that \(L(M_2) = A_2\), then there is another DFA, let’s call it \(M\), such that \(L(M) = A_1 \cup A_2\). (Theorem 1.25 in Sipser, page 45)

Proof idea:

Formal construction:

Example: When \(A_1 = \{w \mid w~\text{has an $a$ and ends in $b$} \}\) and \(A_2 = \{ w \mid w~\text{is of even length} \}\).

Suppose \(A_1, A_2\) are languages over an alphabet \(\Sigma\).

Claim: if there is a DFA \(M_1\) such that \(L(M_1) = A_1\) and a DFA \(M_2\) such that \(L(M_2) = A_2\), then there is another DFA, let’s call it \(M\), such that \(L(M) = A_1 \cap A_2\). (Footnote to Sipser Theorem 1.25, page 46)

Proof idea:

Formal construction:
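A Python sketch of the standard product construction covers both the union claim and the intersection claim: it runs the two DFAs in lockstep on pairs of states. Only the accept condition changes between the two. The DFAs `M1` and `M2` for the example languages \(A_1\) and \(A_2\) are our own hypothetical designs. DFAs are encoded as tuples `(states, sigma, delta, start, accept)` with `delta[(q, a)]` the next state.

```python
from itertools import product


def product_dfa(m1, m2, combine):
    """Run M1 and M2 in lockstep on pairs of states.

    combine(in_f1, in_f2) decides acceptance: `or` gives union, `and` gives intersection.
    """
    q1s, sigma, d1, s1, f1 = m1
    q2s, _, d2, s2, f2 = m2
    states = set(product(q1s, q2s))
    delta = {((p, q), a): (d1[(p, a)], d2[(q, a)]) for (p, q) in states for a in sigma}
    accept = {(p, q) for (p, q) in states if combine(p in f1, q in f2)}
    return states, sigma, delta, (s1, s2), accept


def dfa_accepts(m, w):
    _, _, delta, q, accept = m
    for a in w:
        q = delta[(q, a)]
    return q in accept


# Hypothetical DFAs for the example languages over {a, b}
M1 = (  # A1: has an a and ends in b
    {"no_a", "a_last", "ends_b"}, "ab",
    {("no_a", "a"): "a_last", ("no_a", "b"): "no_a",
     ("a_last", "a"): "a_last", ("a_last", "b"): "ends_b",
     ("ends_b", "a"): "a_last", ("ends_b", "b"): "ends_b"},
    "no_a", {"ends_b"})
M2 = (  # A2: even length
    {"even", "odd"}, "ab",
    {("even", "a"): "odd", ("even", "b"): "odd",
     ("odd", "a"): "even", ("odd", "b"): "even"},
    "even", {"even"})

UNION = product_dfa(M1, M2, lambda x, y: x or y)
INTERSECTION = product_dfa(M1, M2, lambda x, y: x and y)
```

Here `M1` tracks whether an \(a\) has been seen and whether the last symbol was \(b\), and `M2` tracks the parity of the length. Both product machines have the same \(3 \times 2 = 6\) states; only their accept sets differ.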

Day8

So far we have that:

If there is a DFA recognizing a language, there is a DFA recognizing its complement.

If there are NFA recognizing two languages, there is an NFA recognizing their union.

If there are DFA recognizing two languages, there is a DFA recognizing their union.

If there are DFA recognizing two languages, there is a DFA recognizing their intersection.

Our goals for today are to (1) prove similar results about other set operations, (2) prove that NFA and DFA are equally expressive, and therefore (3) define an important class of languages.

Suppose \(A_1, A_2\) are languages over an alphabet \(\Sigma\).

Claim: if there is an NFA \(N_1\) such that \(L(N_1) = A_1\) and an NFA \(N_2\) such that \(L(N_2) = A_2\), then there is another NFA, let’s call it \(N\), such that \(L(N) = A_1 \circ A_2\).

Proof idea: Allow the computation to move between \(N_1\) and \(N_2\) “spontaneously” when it reaches an accepting state of \(N_1\), guessing that we’ve reached the point where the two parts of the string in the set-wise concatenation are glued together.

Formal construction: Let \(N_1 = (Q_1, \Sigma, \delta_1, q_1, F_1)\) and \(N_2 = (Q_2, \Sigma, \delta_2,q_2, F_2)\) and assume \(Q_1 \cap Q_2 = \emptyset\). Construct \(N = (Q, \Sigma, \delta, q_0, F)\) where

\(Q =\)

\(q_0 =\)

\(F =\)

\(\delta: Q \times \Sigma_\varepsilon \to \mathcal{P}(Q)\) is defined by, for \(q \in Q\) and \(a \in \Sigma_{\varepsilon}\): \[\delta((q,a))=\begin{cases}
\delta_1 ((q,a)) &\qquad\text{if } q\in Q_1 \textrm{ and } q \notin F_1\\
\delta_1 ((q,a)) &\qquad\text{if } q\in F_1 \textrm{ and } a \in \Sigma\\
\delta_1 ((q,a)) \cup \{q_2\} &\qquad\text{if } q\in F_1 \textrm{ and } a = \varepsilon\\
\delta_2 ((q,a)) &\qquad\text{if } q\in Q_2
\end{cases}\]

Proof of correctness would prove that \(L(N) = A_1 \circ A_2\) in two steps. First, consider an arbitrary string accepted by \(N\), trace an accepting computation of \(N\) on it, and use that trace to prove the string can be written as the result of concatenating two strings, the first in \(A_1\) and the second in \(A_2\). Then, take an arbitrary string in \(A_1 \circ A_2\) and prove that it is accepted by \(N\). Details left for extra practice.
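A Python sketch of this construction, together with an NFA simulator that tracks the set of possible states and closes it under spontaneous moves after each step. The tuple encoding, the use of `""` for \(\varepsilon\), and the names `concat_nfa`, `eps_closure`, and `nfa_accepts` are assumptions for illustration. The example pairs a hypothetical NFA for \(a^*\) with one for \(b\), so the result recognizes \(L(a^*b)\), the language in the application below.

```python
EPS = ""  # the empty string stands in for epsilon


def eps_closure(delta, states):
    """All states reachable from `states` using only spontaneous moves."""
    closure, stack = set(states), list(states)
    while stack:
        q = stack.pop()
        for r in delta.get((q, EPS), set()):
            if r not in closure:
                closure.add(r)
                stack.append(r)
    return closure


def nfa_accepts(n, w):
    _, _, delta, start, accept = n
    current = eps_closure(delta, {start})
    for a in w:
        moved = set()
        for q in current:
            moved |= delta.get((q, a), set())
        current = eps_closure(delta, moved)
    return bool(current & accept)


def concat_nfa(n1, n2):
    """NFA for L(N1) o L(N2), following the case definition of delta above."""
    q1s, sigma, d1, s1, f1 = n1
    q2s, _, d2, s2, f2 = n2
    assert not (q1s & q2s), "state sets must be disjoint"
    delta = {key: set(targets) for key, targets in list(d1.items()) + list(d2.items())}
    for q in f1:  # accept state of N1: may also jump spontaneously to q2
        delta[(q, EPS)] = delta.get((q, EPS), set()) | {s2}
    return q1s | q2s, sigma, delta, s1, set(f2)


# Hypothetical NFAs over {a, b}: N1 recognizes a*, N2 recognizes b
N1 = ({"x0"}, "ab", {("x0", "a"): {"x0"}}, "x0", {"x0"})
N2 = ({"y0", "y1"}, "ab", {("y0", "b"): {"y1"}}, "y0", {"y1"})
A_STAR_B = concat_nfa(N1, N2)
```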

Application: A state diagram for an NFA over \(\Sigma = \{a,b\}\) that recognizes \(L ( a^*b)\):

Suppose \(A\) is a language over an alphabet \(\Sigma\).

Claim: if there is an NFA \(N\) such that \(L(N) = A\), then there is another NFA, let’s call it \(N'\), such that \(L(N') = A^*\).

Proof idea: Add a fresh start state, which is an accept state, with a spontaneous move to the old start state. Add spontaneous moves from each (old) accept state to the old start state.

Formal construction: Let \(N = (Q, \Sigma, \delta, q_1, F)\) and assume \(q_0 \notin Q\). Construct \(N' = (Q', \Sigma, \delta', q_0, F')\) where

\(Q' = Q \cup \{q_0\}\)

\(F' = F \cup \{q_0\}\)

\(\delta': Q' \times \Sigma_\varepsilon \to \mathcal{P}(Q')\) is defined by, for \(q \in Q'\) and \(a \in \Sigma_{\varepsilon}\): \[\delta'((q,a))=\begin{cases}
\delta ((q,a)) &\qquad\text{if } q\in Q \textrm{ and } q \notin F\\
\delta ((q,a)) &\qquad\text{if } q\in F \textrm{ and } a \in \Sigma\\
\delta ((q,a)) \cup \{q_1\} &\qquad\text{if } q\in F \textrm{ and } a = \varepsilon\\
\{q_1\} &\qquad\text{if } q = q_0 \textrm{ and } a = \varepsilon \\
\emptyset &\qquad\text{if } q = q_0 \textrm { and } a \in \Sigma
\end{cases}\]

Proof of correctness would prove that \(L(N') = A^*\) in two steps. First, consider an arbitrary string accepted by \(N'\), trace an accepting computation of \(N'\) on it, and use that trace to prove the string can be written as the result of concatenating some number of strings, each of which is in \(A\). Then, take an arbitrary string in \(A^*\) and prove that it is accepted by \(N'\). Details left for extra practice.
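A Python sketch of the Kleene star construction, with a small NFA simulator that tracks the set of possible states and closes it under spontaneous moves. Encoding: NFAs are tuples `(states, sigma, delta, start, accept)` with `delta[(q, a)]` a set of states and `""` standing in for \(\varepsilon\). The names, including the fresh state `q_new`, are hypothetical. Starting from a hypothetical NFA for \(L(a^*b)\), the result recognizes \(L((a^*b)^*)\), as in the application below.

```python
EPS = ""  # the empty string stands in for epsilon


def eps_closure(delta, states):
    """All states reachable from `states` using only spontaneous moves."""
    closure, stack = set(states), list(states)
    while stack:
        q = stack.pop()
        for r in delta.get((q, EPS), set()):
            if r not in closure:
                closure.add(r)
                stack.append(r)
    return closure


def nfa_accepts(n, w):
    _, _, delta, start, accept = n
    current = eps_closure(delta, {start})
    for a in w:
        moved = set()
        for q in current:
            moved |= delta.get((q, a), set())
        current = eps_closure(delta, moved)
    return bool(current & accept)


def star_nfa(n, new_start="q_new"):
    """NFA for L(N)*, following the case definition of delta' above."""
    qs, sigma, d, s, f = n
    assert new_start not in qs, "the new start state must be fresh"
    delta = {key: set(targets) for key, targets in d.items()}
    for q in f:  # old accept state: may also jump spontaneously to the old start
        delta[(q, EPS)] = delta.get((q, EPS), set()) | {s}
    delta[(new_start, EPS)] = {s}  # fresh start state moves spontaneously to old start
    return qs | {new_start}, sigma, delta, new_start, set(f) | {new_start}


# Hypothetical NFA over {a, b} recognizing a*b
N = ({"p", "r"}, "ab", {("p", "a"): {"p"}, ("p", "b"): {"r"}}, "p", {"r"})
STAR = star_nfa(N)
```

Because the fresh start state is accepting, the empty string is accepted even though \(N\) rejects it.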

Application: A state diagram for an NFA over \(\Sigma = \{a,b\}\) that recognizes \(L (( a^*b)^* )\):

Suppose \(A\) is a language over an alphabet \(\Sigma\).

Claim: if there is an NFA \(N\) such that \(L(N) = A\), then there is a DFA \(M\) such that \(L(M) = A\).

Proof idea: States in \(M\) are “macro-states” (collections of states from \(N\)) that represent the set of possible states a computation of \(N\) might be in.

Formal construction: Let \(N = (Q, \Sigma, \delta, q_0, F)\). Define \[M = (~ \mathcal{P}(Q), \Sigma, \delta', q', \{ X \subseteq Q \mid X \cap F \neq \emptyset \}~ )\] where \(q' = \{ q \in Q \mid \text{$q = q_0$ or is accessible from $q_0$ by spontaneous moves in $N$} \}\) and \[\delta' (~(X, x)~) = \{ q \in Q \mid \text{$q \in \delta( ~(r,x)~)$ for some $r \in X$, or $q$ is accessible by spontaneous moves in $N$ from some state in $\delta( ~(r,x)~)$ with $r \in X$} \}\]
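A Python sketch of the macro-state construction, using frozensets of NFA states as DFA states. It differs from the formal construction above in one deliberate way. Instead of all of \(\mathcal{P}(Q)\), it builds only the macro-states reachable from \(q'\), which is the pruning suggested in the note below. The encoding and names are assumptions. The example NFA (strings over \(\{0,1\}\) ending in \(01\), with one spontaneous move) is hypothetical.

```python
EPS = ""  # the empty string stands in for epsilon


def eps_closure(delta, states):
    """All states reachable from `states` using only spontaneous moves."""
    closure, stack = set(states), list(states)
    while stack:
        q = stack.pop()
        for r in delta.get((q, EPS), set()):
            if r not in closure:
                closure.add(r)
                stack.append(r)
    return closure


def subset_construction(n):
    """DFA whose states are the macro-states reachable from the start macro-state."""
    _, sigma, delta, start, accept = n
    first = frozenset(eps_closure(delta, {start}))  # q'
    dfa_delta, seen, todo = {}, {first}, [first]
    while todo:
        X = todo.pop()
        for x in sigma:
            moved = set()
            for r in X:
                moved |= delta.get((r, x), set())
            Y = frozenset(eps_closure(delta, moved))  # delta'((X, x))
            dfa_delta[(X, x)] = Y
            if Y not in seen:
                seen.add(Y)
                todo.append(Y)
    dfa_accept = {X for X in seen if X & accept}  # macro-states that meet F
    return seen, sigma, dfa_delta, first, dfa_accept


def dfa_accepts(m, w):
    _, _, delta, q, accept = m
    for a in w:
        q = delta[(q, a)]
    return q in accept


# Hypothetical NFA over {0,1} for strings ending in 01 (with one epsilon move)
N = ({"s", "t", "u", "v"}, "01",
     {("s", "0"): {"s"}, ("s", "1"): {"s"}, ("s", EPS): {"t"},
      ("t", "0"): {"u"}, ("u", "1"): {"v"}},
     "s", {"v"})
M = subset_construction(N)
```

Only three macro-states turn out to be reachable here, \(\{s,t\}\), \(\{s,t,u\}\), and \(\{s,t,v\}\), out of the \(2^4 = 16\) subsets of \(Q\).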

Consider the state diagram of an NFA over \(\{a,b\}\). Use the “macro-state” construction to find an equivalent DFA.

Consider the state diagram of an NFA over \(\{0,1\}\). Use the “macro-state” construction to find an equivalent DFA.

Note: We can often prune the DFAs that result from the “macro-state” constructions to get an equivalent DFA with fewer states (e.g. only the “macro-states” reachable from the start state).

The class of regular languages

Fix an alphabet \(\Sigma\). For each language \(L\) over \(\Sigma\):

| There is a DFA over \(\Sigma\) that recognizes \(L\) | \(\exists M ~(M \textrm{ is a DFA and } L(M) = L)\) |
|---|---|
| if and only if | |
| There is an NFA over \(\Sigma\) that recognizes \(L\) | \(\exists N ~(N \textrm{ is an NFA and } L(N) = L)\) |
| if and only if | |
| There is a regular expression over \(\Sigma\) that describes \(L\) | \(\exists R ~(R \textrm{ is a regular expression and } L(R) = L)\) |

A language is called regular when any (hence all) of the above three conditions are met.

We already proved that DFAs and NFAs are equally expressive. It remains to prove that regular expressions are too.

Part 1: Suppose \(A\) is a language over an alphabet \(\Sigma\). If there is a regular expression \(R\) such that \(L(R) = A\), then there is an NFA, let’s call it \(N\), such that \(L(N) = A\).

Structural induction: A regular expression is built from basis regular expressions using inductive steps (union, concatenation, Kleene star symbols). Use constructions to mirror these in NFAs.

Application: A state diagram for an NFA over \(\{a,b\}\) that recognizes \(L(a^* (ab)^*)\):

Part 2: Suppose \(A\) is a language over an alphabet \(\Sigma\). If there is a DFA \(M\) such that \(L(M) = A\), then there is a regular expression, let’s call it \(R\), such that \(L(R) = A\).

Proof idea: Trace all possible paths from the start state to an accept state. Express the labels of these paths as regular expressions, and union them all.

Add a new start state with an \(\varepsilon\) arrow to the old start state.

Add a new accept state with \(\varepsilon\) arrows from the old accept states. Make the old accept states non-accept.

Remove one (of the old) states at a time: modify the regular expressions on arrows that went through the removed state to restore the language recognized by the machine.

Application: Find a regular expression describing the language recognized by the DFA with state diagram
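A Python sketch of the state-removal procedure. It produces a pattern for Python's `re` module rather than textbook notation: `(?:...)` groups, `|` is union, `*` is Kleene star, the empty string stands for \(\varepsilon\), and `None` stands for \(\emptyset\). The fresh start and accept states are plain Python objects, and all names (`dfa_to_regex`, `agrees_with`, and the example table `DELTA_M`) are hypothetical. The resulting expressions are long, so `agrees_with` checks a pattern against a membership test on all short strings instead of comparing text. The example uses the DFA \(M\) from Day 5, which accepts exactly the strings whose length is a multiple of 3.

```python
import re
from itertools import product


def union(r, s):
    """Pattern for L(r) U L(s); None encodes the empty language."""
    if r is None:
        return s
    if s is None:
        return r
    return f"(?:{r}|{s})"


def star(r):
    """Kleene star; both the empty language and epsilon star to epsilon."""
    return "" if r in (None, "") else f"(?:{r})*"


def dfa_to_regex(states, delta, start, accept):
    """Remove the given DFA states one at a time; return a pattern or None."""
    new_start, new_accept = object(), object()  # fresh states, distinct from all others
    arrows = {}  # (p, q) -> pattern labelling the arrow from p to q

    def add(p, q, r):
        arrows[(p, q)] = union(arrows.get((p, q)), r)

    for (q, a), target in delta.items():
        add(q, target, re.escape(a))
    add(new_start, start, "")  # epsilon arrow to the old start state
    for f in accept:
        add(f, new_accept, "")  # epsilon arrows from the old accept states
    for k in states:
        loop = star(arrows.get((k, k)))
        ins = [p for (p, q) in arrows if q == k and p != k]
        outs = [q for (p, q) in arrows if p == k and q != k]
        for p in ins:
            for q in outs:
                # reroute p -> k -> q so the language is unchanged
                add(p, q, arrows[(p, k)] + loop + arrows[(k, q)])
        for key in [key for key in arrows if k in key]:
            del arrows[key]  # k is gone, along with its arrows
    return arrows.get((new_start, new_accept))


def agrees_with(pattern, member, alphabet, max_len):
    """Does the pattern match exactly the short strings that satisfy member?"""
    return all(
        (pattern is not None and re.fullmatch(pattern, w) is not None) == member(w)
        for n in range(max_len + 1)
        for w in ("".join(t) for t in product(alphabet, repeat=n))
    )


# Example: the DFA M from Day 5 (length is a multiple of 3)
DELTA_M = {("q0", "a"): "q1", ("q0", "b"): "q1", ("q1", "a"): "q2",
           ("q1", "b"): "q2", ("q2", "a"): "q0", ("q2", "b"): "q0"}
PATTERN = dfa_to_regex(["q0", "q1", "q2"], DELTA_M, "q0", {"q0"})
```

Removing states in a different order gives a different, but equivalent, expression.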

Day4

**This definition was in the pre-class reading** A finite automaton (FA) is specified by \(M = (Q, \Sigma, \delta, q_0, F)\). This \(5\)-tuple is called the formal definition of the FA.

The FA can also be represented by its state diagram, with nodes for the states, labelled edges specifying the transition function, and decorations on nodes denoting the start and accept states.

Finite set of states \(Q\) can be labelled by any collection of distinct names. Often we use default state labels \(q0, q1, \ldots\)

The alphabet \(\Sigma\) determines the possible inputs to the automaton. Each input to the automaton is a string over \(\Sigma\), and the automaton “processes” the input one symbol (or character) at a time.

The transition function \(\delta\) gives the next state of the automaton based on the current state of the machine and on the next input symbol.

The start state \(q_0\) is an element of \(Q\). Each computation of the machine starts at the start state.

The accept (final) states \(F\) form a subset of the states of the automaton, \(F \subseteq Q\). These states are used to flag if the machine accepts or rejects an input string.

The computation of a machine on an input string is a sequence of states in the machine, starting with the start state, determined by transitions of the machine as it reads successive input symbols.

The finite automaton \(M\) accepts the given input string exactly when the computation of \(M\) on the input string ends in an accept state. \(M\) rejects the given input string exactly when the computation of \(M\) on the input string ends in a nonaccept state, that is, a state that is not in \(F\).

The language of \(M\), \(L(M)\), is defined as the set of all strings that are each accepted by the machine \(M\). Each string that is rejected by \(M\) is not in \(L(M)\). The language of \(M\) is also called the language recognized by \(M\).

What is finite about all finite automata? (Select all that apply)

The size of the machine (number of states, number of arrows)

The length of each computation of the machine

The number of strings that are accepted by the machine

The formal definition of this FA is

Classify each string \(a, aa, ab, ba, bb, \varepsilon\) as accepted by the FA or rejected by the FA.

Why are these the only two options?

The language recognized by this automaton is

The language recognized by this automaton is

The language recognized by this automaton is

Day5

Review: Formal definition of DFA: \(M = (Q, \Sigma, \delta, q_0, F)\)


Finite set of states \(Q\)

Alphabet \(\Sigma\)

Transition function \(\delta\)

Start state \(q_0\)

Accept (final) states \(F\)

Quick check: In the state diagram of \(M\), how many outgoing arrows are there from each state?

Note: We’ll see a new kind of finite automaton. It will be helpful to distinguish it from the machines we’ve been talking about, so we’ll use Deterministic Finite Automaton (DFA) to refer to the machines from Section 1.1.

\(M = ( \{ q0, q1, q2\}, \{a,b\}, \delta, q0, \{q0\} )\) where \(\delta\) is (rows labelled by states and columns labelled by symbols):

| \(\delta\) | \(a\) | \(b\) |
|---|---|---|
| \(q0\) | \(q1\) | \(q1\) |
| \(q1\) | \(q2\) | \(q2\) |
| \(q2\) | \(q0\) | \(q0\) |

The state diagram for \(M\) is

Give two examples of strings that are accepted by \(M\) and two examples of strings that are rejected by \(M\):

A regular expression describing \(L(M)\) is

A state diagram for a finite automaton recognizing \[\{w \mid w~\text{is a string over $\{a,b\}$ whose length is not a multiple of $3$} \}\]

Extra example: Let \(n\) be an arbitrary positive integer. What is a formal definition for a finite automaton recognizing \[\{w \mid w~\text{is a string over $\{0,1\}$ whose length is not a multiple of $n$} \}?\]
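One possible answer to the extra example, as a Python sketch. It uses state names \(0, \dots, n-1\) in place of \(q0, q1, \ldots\), and the function name is hypothetical. State \(i\) records the length read so far modulo \(n\), every symbol moves from state \(i\) to state \((i+1) \bmod n\), and every state except \(0\) accepts. With \(n = 3\) and \(\Sigma = \{a, b\}\), this recognizes the language in the previous example.

```python
def not_multiple_of_n_dfa(n, sigma="01"):
    """DFA for {w over sigma : |w| is not a multiple of n}, for a positive integer n.

    State i (0 <= i < n) means: the number of symbols read so far is congruent to i mod n.
    """
    states = set(range(n))
    delta = {(i, a): (i + 1) % n for i in states for a in sigma}
    accept = {i for i in states if i != 0}
    return states, sigma, delta, 0, accept


def dfa_accepts(m, w):
    _, _, delta, q, accept = m
    for a in w:
        q = delta[(q, a)]
    return q in accept


M3 = not_multiple_of_n_dfa(3)
```

For \(n = 1\), the accept set is empty, which matches the fact that every length is a multiple of \(1\).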

Consider the alphabet \(\Sigma_1 = \{0,1\}\).

A state diagram for a finite automaton that recognizes \(\{w \mid w~\text{contains at most two $1$'s} \}\) is

A state diagram for a finite automaton that recognizes \(\{w \mid w~\text{contains more than two $1$'s} \}\) is

Strategy: Add “labels” for states in the state diagram, e.g. “have not seen any of desired pattern yet” or “sink state”. Then we can use the analysis of the roles of the states in the state diagram to work towards a description of the language recognized by the finite automaton.

Or: decompose the language into simpler ones that we already know how to recognize with a DFA or NFA.

Textbook Exercise 1.14: Suppose \(A\) is a language over an alphabet \(\Sigma\). If there is a DFA \(M\) such that \(L(M) = A\), then there is another DFA, let’s call it \(M'\), such that \(L(M') = \overline{A}\), the complement of \(A\), defined as \(\{ w \in \Sigma^* \mid w \notin A \}\).

Proof idea:

A useful bit of terminology: the iterated transition function of a finite automaton \(M = (Q, \Sigma, \delta, q_0, F)\) is defined recursively by \[\delta^* (~(q,w)~) =\begin{cases}
q \qquad &\text{if $q \in Q, w = \varepsilon$} \\
\delta( ~(q,a)~) \qquad &\text{if $q \in Q$, $w = a \in \Sigma$ } \\
\delta(~(\delta^*(~(q,u)~), a) ~) \qquad &\text{if $q \in Q$, $w = ua$ where $u \in \Sigma^*$ and $a \in \Sigma$}
\end{cases}\]

Using this terminology, \(M\) accepts a string \(w\) over \(\Sigma\) if and only if \(\delta^*( ~(q_0,w)~) \in F\).
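The recursive definition of \(\delta^*\) translates directly into Python. It also gives one way to check a sketch of the complement construction: keep the states, transitions, and start state, and swap accept and nonaccept states. The encoding and function names are assumptions. The example uses the DFA \(M\) given by the transition table earlier in this section, which accepts exactly the strings whose length is a multiple of 3.

```python
def delta_star(delta, q, w):
    """Iterated transition function, following the recursive definition.

    The single-symbol case w = a is the special case u = epsilon of the last case.
    """
    if w == "":
        return q
    u, a = w[:-1], w[-1]
    return delta[(delta_star(delta, q, u), a)]


def accepts(m, w):
    _, _, delta, q0, accept = m
    return delta_star(delta, q0, w) in accept


def complement_dfa(m):
    """Same states, alphabet, transitions, and start state; swap accept and nonaccept."""
    states, sigma, delta, q0, accept = m
    return states, sigma, delta, q0, set(states) - set(accept)


# The DFA M with the transition table above; accept states {q0}
DELTA = {("q0", "a"): "q1", ("q0", "b"): "q1",
         ("q1", "a"): "q2", ("q1", "b"): "q2",
         ("q2", "a"): "q0", ("q2", "b"): "q0"}
M = ({"q0", "q1", "q2"}, {"a", "b"}, DELTA, "q0", {"q0"})
M_COMPLEMENT = complement_dfa(M)
```

Swapping works because a DFA has exactly one computation on each input string. That computation ends in \(F\) or in \(Q \setminus F\), never both.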

Proof:

Day6

| Nondeterministic finite automaton (Sipser page 53), given as \(M = (Q, \Sigma, \delta, q_0, F)\) | |
|---|---|
| Finite set of states \(Q\) | Can be labelled by any collection of distinct names. Default: \(q0, q1, \ldots\) |
| Alphabet \(\Sigma\) | Each input to the automaton is a string over \(\Sigma\). |
| Arrow labels \(\Sigma_\varepsilon\) | \(\Sigma_\varepsilon = \Sigma \cup \{ \varepsilon\}\). Arrows in the state diagram are labelled either by symbols from \(\Sigma\) or by \(\varepsilon\). |
| Transition function \(\delta\) | \(\delta: Q \times \Sigma_{\varepsilon} \to \mathcal{P}(Q)\) gives the set of possible next states for a transition from the current state upon reading a symbol or spontaneously moving. |
| Start state \(q_0\) | Element of \(Q\). Each computation of the machine starts at the start state. |
| Accept (final) states \(F\) | \(F \subseteq Q\). |
| \(M\) accepts the input string \(w \in \Sigma^*\) if and only if there is a computation of \(M\) on \(w\) that processes the whole string and ends in an accept state. | |

The formal definition of the NFA over \(\{0,1\}\) given by this state diagram is:

The language over \(\{0,1\}\) recognized by this NFA is:

Practice: Change the transition function to get a different NFA which accepts the empty string (and potentially other strings too).

The state diagram of an NFA over \(\{a,b\}\) is:

The formal definition of this NFA is:

Suppose \(A_1, A_2\) are languages over an alphabet \(\Sigma\).

Claim: if there is an NFA \(N_1\) such that \(L(N_1) = A_1\) and an NFA \(N_2\) such that \(L(N_2) = A_2\), then there is another NFA, let’s call it \(N\), such that \(L(N) = A_1 \cup A_2\).

Proof idea: Use nondeterminism to choose which of \(N_1\), \(N_2\) to run.

Formal construction: Let \(N_1 = (Q_1, \Sigma, \delta_1, q_1, F_1)\) and \(N_2 = (Q_2, \Sigma, \delta_2,q_2, F_2)\) and assume \(Q_1 \cap Q_2 = \emptyset\) and that \(q_0 \notin Q_1 \cup Q_2\). Construct \(N = (Q, \Sigma, \delta, q_0, F_1 \cup F_2)\) where

\(Q =\)

\(\delta: Q \times \Sigma_\varepsilon \to \mathcal{P}(Q)\) is defined by, for \(q \in Q\) and \(x \in \Sigma_{\varepsilon}\): \[\phantom{\delta((q,x))=\begin{cases} \delta_1 ((q,x)) &\qquad\text{if } q\in Q_1 \\ \delta_2 ((q,x)) &\qquad\text{if } q\in Q_2 \\ \{q_1,q_2\} &\qquad\text{if } q = q_0, x = \varepsilon \\ \emptyset &\qquad\text{if } q= q_0, x \neq \varepsilon \end{cases}}\]

Proof of correctness would prove that \(L(N) = A_1 \cup A_2\) in two steps. First, consider an arbitrary string accepted by \(N\), trace an accepting computation of \(N\) on it, and use that trace to prove the string is in at least one of \(A_1\), \(A_2\). Then, take an arbitrary string in \(A_1 \cup A_2\) and prove that it is accepted by \(N\). Details left for extra practice.
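A Python sketch of this construction, with a small NFA simulator that tracks the set of possible states and closes it under spontaneous moves. The tuple encoding (with `""` for \(\varepsilon\)), the default name `q0` for the fresh start state, and the example NFAs are all hypothetical.

```python
EPS = ""  # the empty string stands in for epsilon


def eps_closure(delta, states):
    """All states reachable from `states` using only spontaneous moves."""
    closure, stack = set(states), list(states)
    while stack:
        q = stack.pop()
        for r in delta.get((q, EPS), set()):
            if r not in closure:
                closure.add(r)
                stack.append(r)
    return closure


def nfa_accepts(n, w):
    _, _, delta, start, accept = n
    current = eps_closure(delta, {start})
    for a in w:
        moved = set()
        for q in current:
            moved |= delta.get((q, a), set())
        current = eps_closure(delta, moved)
    return bool(current & accept)


def union_nfa(n1, n2, new_start="q0"):
    """NFA for L(N1) U L(N2): a fresh start state guesses which machine to run."""
    q1s, sigma, d1, s1, f1 = n1
    q2s, _, d2, s2, f2 = n2
    assert not (q1s & q2s), "state sets must be disjoint"
    assert new_start not in q1s | q2s, "the new start state must be fresh"
    delta = {key: set(targets) for key, targets in list(d1.items()) + list(d2.items())}
    delta[(new_start, EPS)] = {s1, s2}  # spontaneous moves to both old start states
    return q1s | q2s | {new_start}, sigma, delta, new_start, set(f1) | set(f2)


# Hypothetical NFAs over {a, b}: N1 for strings ending in a, N2 for the string b
N1 = ({"u0", "u1"}, "ab", {("u0", "a"): {"u0", "u1"}, ("u0", "b"): {"u0"}}, "u0", {"u1"})
N2 = ({"v0", "v1"}, "ab", {("v0", "b"): {"v1"}}, "v0", {"v1"})
N = union_nfa(N1, N2)
```

The fresh start state has no transitions on symbols of \(\Sigma\), so the missing dictionary entries play the role of \(\emptyset\).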